Proudly sponsored by ConstructAI, brought to you by Weston Analytics.

Hello Project AI enthusiasts,

This week reveals the tectonic plates shifting beneath the AEC industry. AECOM just bought an AI startup for nearly $400 million, not to license its technology but to acquire the capability itself. Meanwhile, major insurers are formally seeking to exclude AI liabilities from their policies, and 81% of UK construction professionals admit they lack the knowledge to deploy AI responsibly. The gap between AI adoption and AI competence has never been more apparent, or more costly.

In This Edition

Flux check-in

When Big Consulting Becomes a Tech Company: AECOM's $390M Bet on AI Engineering

Global infrastructure giant AECOM just rewrote the playbook for professional services firms. Its $390 million acquisition of Norwegian AI startup Consigli isn't just another corporate deal; it's a fundamental realignment of how traditional consulting firms view their own survival. Instead of licensing AI tools like everyone else, AECOM bought the team, the IP, and the capability itself.

This isn't incremental. This is strategic repositioning. Consigli's "Autonomous Engineer" platform claims a 90% reduction in engineering time for early-stage work such as space planning, MEP loadings, BIM modelling and tender preparation. That's not an efficiency gain. That's restructuring. Read the full breakdown →

What Does This Mean for Me?

If your firm relies on the same commercial tools as competitors, you've got no differentiation. If your consultancy outsourced its AI strategy, competitors can replicate your approach tomorrow. AECOM's move reveals the uncomfortable truth: owning AI capability matters more than accessing it.

Key Themes

Competitive Fear: AECOM feared competitors would "effectively put us out of business" without this move

Strategic Divestment: The firm is now reviewing a divestment of its construction management business to focus on AI-augmented advisory

Labour Economics: A 90% time reduction implies that 40-person design teams could become 4-person teams

Market Disruption: When customers start acquiring the vendors they would otherwise license from, the entire software distribution model breaks

Down the Rabbit Hole

Claude Opus 4.5: The Benchmark Arms Race That Stopped Mattering

Anthropic announced Claude Opus 4.5 this week, scoring above 80% on SWE-bench Verified. The industry called it a breakthrough. But here's Project Flux's contrarian take: by the time you finish evaluating whether Opus 4.5 is "better" than competitors, OpenAI will have released something new and reshuffled the rankings again.

The real story isn't benchmark performance. It's that Opus 4.5 costs 67% less than Opus 4, and Anthropic reports that it uses 48-76% fewer tokens than Sonnet 4.5 to match or beat Sonnet's best results. That economic shift matters far more than synthetic test scores. Read the full breakdown →

What Does This Mean for Me?

Stop optimising for the "best model". The model that wins this month loses next month. Build an AI architecture flexible enough to swap models quarterly without rebuilding everything. Token efficiency and price-to-capability ratio are your only sustainable competitive advantages.

Key Themes

Model Velocity: OpenAI, Google and Anthropic release major updates every 8-12 weeks, so competitive advantage evaporates month by month

Economic Efficiency: A model that's 95% as capable but costs 67% less is the better choice for production deployment

Real-World Performance: Teams report that tasks "impossible for Sonnet 4.5" now work with Opus 4.5

Architecture Flexibility: The only strategic question: can you swap models without rewriting your entire implementation?
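To make the architecture-flexibility point concrete, here is a minimal Python sketch, assuming every provider is wrapped in a simple prompt-in, text-out adapter. The model names, capability scores and per-task costs are hypothetical placeholders, not real benchmarks or prices; in practice you would fill them from your own evaluations and invoices. "model-b" simply mirrors the "95% as capable, 67% cheaper" case described above.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Each provider is wrapped in an adapter with this (hypothetical) shape:
# prompt in, text out. Real SDK calls would live inside the adapters,
# so the rest of the application never depends on a specific vendor.
CompletionFn = Callable[[str], str]


@dataclass(frozen=True)
class ModelOption:
    name: str
    capability: float      # e.g. share of your own evaluation suite passed (0-1)
    cost_per_task: float   # measured average cost per task, any currency
    complete: CompletionFn

    @property
    def capability_per_cost(self) -> float:
        return self.capability / self.cost_per_task


class ModelRegistry:
    """Routes requests to whichever registered model currently offers the best value."""

    def __init__(self) -> None:
        self._models: Dict[str, ModelOption] = {}

    def register(self, option: ModelOption) -> None:
        # Registering under an existing name replaces that model: this is the "swap".
        self._models[option.name] = option

    def best_value(self, min_capability: float = 0.0) -> ModelOption:
        # Only models that clear the quality bar are eligible; among those,
        # pick the one with the highest capability per unit cost.
        eligible = [m for m in self._models.values() if m.capability >= min_capability]
        if not eligible:
            raise ValueError("no registered model meets the capability threshold")
        return max(eligible, key=lambda m: m.capability_per_cost)

    def complete(self, prompt: str, min_capability: float = 0.0) -> str:
        return self.best_value(min_capability).complete(prompt)


# Stub adapters standing in for real provider calls.
def model_a(prompt: str) -> str:
    return f"model-a: {prompt}"


def model_b(prompt: str) -> str:
    return f"model-b: {prompt}"


registry = ModelRegistry()
# Hypothetical figures: model-b is 95% as capable at roughly a third of the cost.
registry.register(ModelOption("model-a", capability=1.00, cost_per_task=1.00, complete=model_a))
registry.register(ModelOption("model-b", capability=0.95, cost_per_task=0.33, complete=model_b))

print(registry.complete("Summarise the RFI log", min_capability=0.9))
# → model-b: Summarise the RFI log
```

Because application code only talks to the registry, adopting next quarter's model is a single `register` call plus a re-run of your own evaluations, rather than a rewrite. Raising `min_capability` above 0.95 would route the same request back to model-a.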

Down the Rabbit Hole

The Insurance Industry Is Quietly Walking Away From AI Risk

AIG, Great American Insurance Group, and WR Berkley have formally asked state regulators for permission to exclude AI-related liabilities from commercial insurance policies. The industry that prices risk for a living has concluded AI risk is too unpredictable, too opaque, and too difficult to price using traditional underwriting.

Translation: they're saying, "This category of risk is outside our capacity to manage." For companies deploying AI without proper governance, this is the safety net disappearing. Read the full breakdown →

What Does This Mean for Me?

Your traditional commercial insurance may no longer cover AI-related losses. WR Berkley's proposed "absolute AI exclusion" removes coverage for chatbots, automated decision systems, generative content tools, and algorithmic compliance systems. That's exactly where most organisations have already deployed AI.

Key Themes

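Failed Protections: Google's AI Overview prompted a $110M defamation lawsuit after falsely stating that a company faced legal action; Air Canada's chatbot invented a bereavement-fare refund policy, and a tribunal held the airline to it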

Correlated Risk: AI introduces correlated risk, where one faulty model can affect thousands of customers simultaneously

Regulatory Response: At least 21 US states have adopted the NAIC Model Bulletin, which requires board-level AI oversight and bias testing

Insurance Evolution: Specialist AI insurance exists, but it requires detailed audits and strict governance, and it costs significantly more
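Why does correlation worry underwriters so much? A rough Monte Carlo sketch in Python, using made-up parameters (500 customers, a 2% annual failure chance, one unit of loss per affected customer), compares independent systems with a single shared model. The expected loss is the same in both cases; the difference is in the tail, measured here as the 1-in-200-year loss (the 99.5th percentile). The figures are illustrative assumptions, not drawn from any insurer's data.

```python
import random
import statistics


def simulate_annual_losses(customers: int, p_fail: float, years: int,
                           correlated: bool, seed: int = 42) -> list[int]:
    """Simulate total loss per year, counting one unit per affected customer."""
    rng = random.Random(seed)
    losses = []
    for _ in range(years):
        if correlated:
            # One shared model: if it fails this year, every customer is hit at once.
            losses.append(customers if rng.random() < p_fail else 0)
        else:
            # Independent systems: each customer's failure is a separate draw.
            losses.append(sum(rng.random() < p_fail for _ in range(customers)))
    return losses


def percentile(values: list[int], q: float) -> int:
    """Simple empirical percentile: the value at position q in the sorted list."""
    ordered = sorted(values)
    return ordered[int(q * (len(ordered) - 1))]


# Illustrative parameters only: 500 customers, 2% annual failure chance, 4,000 simulated years.
independent = simulate_annual_losses(500, 0.02, 4000, correlated=False)
correlated = simulate_annual_losses(500, 0.02, 4000, correlated=True)

for label, losses in (("independent", independent), ("correlated", correlated)):
    print(f"{label:>11}: mean loss {statistics.mean(losses):5.1f}, "
          f"1-in-200-year loss {percentile(losses, 0.995)}")
```

Both portfolios average roughly 10 units of loss a year, but independent failures average out across customers, while the shared-model case either loses nothing or loses everything at once. That all-or-nothing shape is exactly what diversification-based pricing struggles with.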

Down the Rabbit Hole

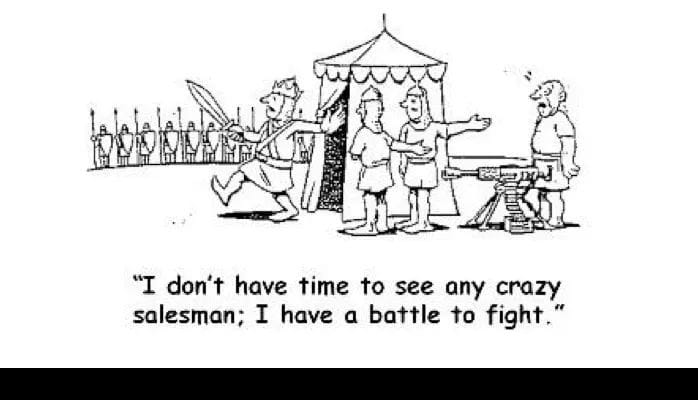

The Construction Industry's AI Crisis Isn't Technology, It's Knowledge

Recent research from the AI Institute reveals the brutal reality: 81% of UK construction professionals lack foundational knowledge to deploy AI responsibly. Nearly half cite lack of training as the primary blocker. Yet firms are already deploying AI tools in project management, cost estimation, risk assessment, and autonomous equipment.

This is exactly backwards. You're supposed to understand a tool before deploying it widely. Construction is doing the opposite. Read the full breakdown →

What Does This Mean for Me?

Shadow AI is already happening: people are using ChatGPT, Claude and Gemini on projects without official oversight. They're drafting specs, analysing site photos and estimating costs. Some outputs look plausible but are fundamentally flawed, and nobody catches the problems until they cause damage on site.

Key Themes

Awareness Gap: One in five construction professionals haven't identified which processes could be automated

Implementation Failure: Research describes implementing tools without first investing in organisational knowledge as a "doomed to fail" approach

Trust Without Understanding: Teams using AI without understanding limitations will trust outputs when they shouldn't

Success Patterns: Firms starting with knowledge-building have substantially higher AI implementation success rates

Down the Rabbit Hole

UK Government Commits £24B to AI: What Actually Gets Built Matters More Than the Promise

The UK government announced £24.25 billion in new AI investment commitments, bringing total promises since taking office to £78 billion. A Sovereign AI Unit. Free compute for UK researchers. South Wales growth zone targeting 5,000 AI jobs. Infrastructure investments in power and data centres.

The headline figures are impressive. The fundamental question remains: will this actually translate into economic growth, or join the long list of government technology initiatives that promise transformation but deliver incrementally? Read the full breakdown →

What Does This Mean for Me?

Free compute access for researchers creates resources that didn't exist before. Infrastructure investment in South Wales lowers facility costs. A sovereign AI unit creates a potential customer base for UK AI companies. But none of this should drive strategy decisions until the investments actually materialise.

Key Themes

Investment Breakdown: £137M for AI for Science, £500M for Sovereign AI Unit, £250M in free compute time

Sovereign Strategy: Focus on sovereign capability, building UK-based AI rather than adopting American tools

Accountability Test: Watch whether UK government departments actually use their own AI systems versus defaulting to American cloud providers

Delivery Risk: Between commitment and delivery lies the valley of execution risk

Down the Rabbit Hole

The pulse check

Tips of the week

Become an Effective "Vibe Coder" – Practical Tips for Building Better with AI

AI coding tools like Cursor, Claude Code, Antigravity and Windsurf let non-developers create dashboards and internal applications, but many people still struggle because the AI loses context and unintentionally breaks earlier work. Effective vibe coding is not about becoming a software engineer overnight; it is about giving the AI the right guidance so it can build consistently without causing avoidable issues.

You can achieve this by giving your project a stable memory system. Cursor users can set up a .cursorrules file, and Claude users can create reusable Skills that define design standards, code structure, component choices and preferred patterns. Building layer by layer also keeps the process stable, because each step is tested before the next is added.

Core practices include:

Use a .cursorrules file or Claude Skills to maintain persistent context

Build in layers: first layout, then data, then functionality, then enhancements

Test each layer before moving on

Keep styling, structure and components consistent throughout

Once your tool works locally, deployment becomes very simple. Platforms like Netlify and Vercel connect directly to GitHub, publish your project within minutes, and allow quick iteration without dealing with complex infrastructure. This approach helps teams build practical internal tools that work reliably and can be improved continuously.

Need help structuring your prompts? Try my free Prompt Generator. Describe your task, select parameters (intent, tone, length), and get a structured prompt ready to paste into ChatGPT, Claude, or Perplexity in 30 seconds.

If you would like a deeper, structured learning path, here is the referral link for the course.

Governance & Security

This week brought critical developments in AI governance that project delivery teams need to understand. Anthropic CEO Dario Amodei will testify before the House Homeland Security Committee on 17th December following the first reported AI-orchestrated cyber-espionage campaign; the committee commended Anthropic for self-reporting the attack. This marks a significant moment in AI security governance, with the CEOs of Google Cloud and Quantum Xchange also invited to participate.

Meanwhile, security researchers warned that cracked AI hacking tools will likely flood cybercriminal forums in 2026, enabling attackers to discover vulnerabilities in minutes instead of days. This represents a significant escalation in the cyber threat landscape as AI capabilities become accessible to criminal actors.

On the regulatory front, the Trump administration might not fight state AI regulations after all, signalling a potential shift in federal-state dynamics around AI governance. Additionally, Partnership on AI published new guidance on protecting data workers, highlighting human rights considerations in AI development supply chains.

The message is clear: AI governance isn't optional anymore. Whether you're deploying AI internally or working with AI-powered vendors, understanding the evolving regulatory and security landscape is essential for managing risk effectively.

Robotics

Tesla is bleeding AI talent to a small new robotics start-up – Tesla’s AI and robotics divisions are facing a significant “brain drain” as a stealth startup called Sunday Robotics emerges with a roster of engineers from Tesla’s Optimus and Autopilot teams. Read the story

Home Robot Clears Tables and Loads the Dishwasher All by Itself – Sunday Robotics has a new way to train robots to do common household tasks. The startup plans to put its fully autonomous robots in homes next year. Explore Sunday Robotics

Alibaba launches US$537 Quark AI glasses – Alibaba has launched its Quark AI glasses, priced at US$537. Read more

Social and companion robots: More than just machines? – Social and companion robots occupy a different space in the automation landscape. Instead of replacing physical labour, they augment human relationships, taking on roles traditionally associated with caregivers, teachers, receptionists, and even friends. Read more

Trending Tools and Model Updates

Perplexity AI’s new clothes try-on tool takes on Google – Perplexity’s virtual try-on lets you see clothes on a digital avatar made from your own photo, and the tool realistically shows how fabrics drape and fit, making online shopping feel more accurate. Read the full story

Replit Design Agent for UI Creation – Replit has launched a Design agent that creates UIs from text descriptions, producing modern, clean designs with a clear layout, strong visual hierarchy and intuitive navigation. It includes live previews for desktop and mobile and one-click publishing. Try it now

LLM Council: Multi-Model Consensus Tools – Andrej Karpathy released LLM Council, which sends a question to four top models, has them review and rank each other's answers, and then has a "chairman" model write the final response. It represents a new approach to leveraging multiple AI models for better outputs. Read the full update

Deep Research Now in NotebookLM – Google's NotebookLM has added Deep Research capabilities, expanding its functionality for comprehensive research tasks with integrated AI assistance. See announcement

Perplexity Adds AI-Powered Shopping Feature With PayPal Checkout – Perplexity offers conversational search that takes the user's history and preferences into account when suggesting items. The Perplexity team suggests that the AI can understand each shopper's unique needs better than a search algorithm optimised for advertiser dollars. Explore the announcement

ChatGPT’s voice mode is no longer a separate interface – ChatGPT’s voice mode is getting more usable. OpenAI announced it is updating the user interface to its popular AI chatbot so users can access ChatGPT Voice right inside their chat, instead of having to switch to a separate mode. Learn more

DeepSeek-Math-V2 Achieves IMO Gold – DeepSeek released DeepSeek-Math-V2, an open-source MoE model that achieves gold-medal-level performance on IMO 2025 (International Mathematical Olympiad) problems. Review technical details

Kimi K2 introduces a thinking mode that's turning heads in the AI community – The new mode represents a different approach to AI reasoning, deliberating at length before responding; details about its capabilities and performance are still emerging. Discover the details
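The LLM Council item above describes a three-stage pattern: independent answers, anonymised peer ranking, then a chairman's synthesis. The Python sketch below illustrates that pattern with stub functions in place of real model calls. It is not Karpathy's implementation; the function names, the average-rank aggregation and the toy reviewer and chairman are assumptions chosen for illustration.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical signatures standing in for real LLM calls.
Answerer = Callable[[str], str]                             # question -> answer
Ranker = Callable[[str, Dict[str, str]], List[str]]         # question, {label: answer} -> labels best-first
Chairman = Callable[[str, Dict[str, str], List[str]], str]  # question, answers, ranking -> final text


def run_council(question: str, members: Dict[str, Answerer],
                rankers: Dict[str, Ranker],
                chairman: Chairman) -> Tuple[str, List[str]]:
    """Return the chairman's final answer and the member names ordered best-first."""
    # Stage 1: every member answers independently.
    answers = {name: ask(question) for name, ask in members.items()}

    # Anonymise, so reviewers judge the content rather than the model's name.
    label_of = {name: f"Response {chr(65 + i)}" for i, name in enumerate(answers)}
    by_label = {label_of[name]: text for name, text in answers.items()}

    # Stage 2: each member ranks the other members' answers (never its own).
    positions: Dict[str, List[int]] = {label: [] for label in by_label}
    for name, rank in rankers.items():
        others = {lab: txt for lab, txt in by_label.items() if lab != label_of[name]}
        for pos, lab in enumerate(rank(question, others)):
            positions[lab].append(pos)

    # Aggregate by average rank position; lower is better, unranked answers go last.
    def average_position(lab: str) -> float:
        p = positions[lab]
        return sum(p) / len(p) if p else float("inf")

    ranking = sorted(by_label, key=average_position)

    # Stage 3: the chairman writes the final response from answers plus ranking.
    final = chairman(question, by_label, ranking)
    name_of = {lab: name for name, lab in label_of.items()}
    return final, [name_of[lab] for lab in ranking]


# Toy stand-ins: a real council would prompt a different LLM in each function.
def prefer_longer(question: str, answers: Dict[str, str]) -> List[str]:
    return sorted(answers, key=lambda lab: len(answers[lab]), reverse=True)


def pick_top(question: str, answers: Dict[str, str], ranking: List[str]) -> str:
    return answers[ranking[0]]


members = {
    "model-1": lambda q: "short answer",
    "model-2": lambda q: "a longer, more detailed answer",
    "model-3": lambda q: "medium answer",
}
final, order = run_council("What is RAG?", members,
                           {name: prefer_longer for name in members}, pick_top)
print(final)  # a longer, more detailed answer
print(order)  # ['model-2', 'model-3', 'model-1']
```

Anonymising before the review stage is the key design choice: it stops a reviewer from favouring a model by name. A production version would replace the toy ranker with an LLM prompt and parse its ranking from the reply.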

Links We are Loving

Understanding neural networks through sparse circuits — Neural networks power today’s most capable AI systems, but they remain challenging to understand. We don’t write these models with explicit, step-by-step instructions. Instead, they learn by adjusting billions of internal connections, or “weights,” until they master a task.

The Myth of Being “Too Late” for AI: Why Human Contribution Still Matters — An 18-year-old recently asked whether AI will advance so fast that there will be no meaningful work left for him by graduation. This fear is becoming common, but it is powered far more by hype than reality. AI is impressive, yet still deeply limited, and human contribution will remain essential for decades.

The Emerging Agentic Enterprise: How Leaders Must Navigate a New Age of AI — Executives have long relied on simple categories to frame how technology fits into organisations: Tools automate tasks, people make decisions, and strategy determines how the two work together.

Two Paths to Intelligence: Evolutionary Minds and Text-Trained Models — The space of possible intelligences is far broader than the single evolutionary route that shaped animal minds. Large language models arise from a completely different process driven by patterns in human text rather than biological drives.

AI Has Entered the Age of Research, Not Scaling — Ilya Sutskever says we’re now beyond the phase where more compute alone drives major AI gains. Meaningful progress will come from genuine research breakthroughs instead of sheer scaling.

Here's What Happened When I Gave 'Vibe Coding' a Try — Generative AI is changing the way we live and work in a multitude of ways, including coding, and the best AI bots today can debug, refine, and generate code from a simple text prompt.

UAE launches $1 billion ‘AI for Development’ initiative to accelerate progress across Africa — The UAE has unveiled a $1 billion initiative to support and finance artificial intelligence projects across Africa, aiming to strengthen economic and social development by improving digital infrastructure, enhancing government services and boosting productivity.

The Iceberg Index: Measuring Skills-centred Exposure in the AI Economy — Artificial Intelligence is reshaping America's $9.4 trillion labor market, with cascading effects that extend far beyond visible technology sectors.

AI brings new confidence for early career professionals, REB reports — Real Estate Balance's NextGen survey, which had 460 respondents this year, up 25% from the previous year, showed that progress has been made on issues affecting early-career professionals.

Research finds AI in modular buildings can drastically cut energy waste — A study by Aireavu and Portakabin has found that artificial intelligence can cut energy waste by up to 87%.

McKinsey & Co. cut about 200 global tech jobs as the consulting firm joins rivals in using artificial intelligence to automate some positions.

Investors expect AI use to soar. That’s not happening — American statisticians have released new survey findings revealing an important trend hidden in the data. The employment-weighted share of Americans using AI at work has recently dropped by one percentage point, now standing at 11%.

Paul McCartney Releases Silent Track to Protest AI’s Impact on Music — Having spent the majority of his adult life in the music industry, Paul McCartney watched the industry change numerous times. With each new decade, there seemed to be some new style or invention to make the life of a singer, musician, or even songwriter easier.

What to know about a recent Mixpanel security incident — Transparency is important to us, so we want to inform you about a recent security incident at Mixpanel, a data analytics provider OpenAI used for web analytics on the frontend interface for our API product.

Estimating AI productivity gains from Claude conversations — Real conversations with Claude show that AI can greatly boost labour productivity. Using a privacy-preserving analysis of one hundred thousand chats, the study finds that tasks that would normally take about 90 minutes are completed around 80% faster with Claude’s help.

xAI to Build Solar Farm Near Memphis AI Data Center Amid Pollution Concerns — The AI startup xAI, founded by Elon Musk, told Memphis city and county planners last week of its intention to build a solar farm next to Colossus, one of the world’s largest data centres for training artificial intelligence models.

OpenAI cofounder says scaling compute is not enough to advance AI — AI companies have focused on increasing compute by deploying multiple chips or by amassing vast training datasets. Ilya Sutskever, OpenAI cofounder, said there now needs to be an effective way to utilise all that compute, signalling a return to research but with powerful computers.

Google Reportedly Teams Up with MediaTek for 7th-Gen TPU as TSMC Takes on Production — Google is teaming up with Taiwan’s MediaTek to develop its 7th‑generation TPU for 2026 as tech giants race to advance AI chip technology.

Legally embattled AI music startup Suno raises at $2.45B valuation on $200M revenue — Suno, which allows anyone to create AI-generated songs through prompts, announced on Wednesday that it has raised a $250 million Series C round at a $2.45 billion post-money valuation.

Community

The Spotlight Podcast

From Fields to AI: Why the Future of Intelligent Systems Isn't About Prompting Anymore.

This week, we spoke with Johnny Morris, co-founder of Fifth Dimension, about his journey from watching his father transform derelict monasteries to building AI platforms for real estate professionals. Morris delivers a masterclass on why in-house AI development and "vibe coding" won't save your business, and why the shift from prompting to "context engineering" represents the next genuine frontier in AI adoption.

Key insights: Enterprise search implementations consistently fail when they meet real organisational data. Vibe coding is brilliant for proofs of concept but insufficient for production. The organisations advancing furthest understand that the blocker isn't the technology anymore; it's organisational readiness and clear strategy. Morris believes 2026 will reveal the gap between those on actual transformation paths and those still experimenting at the margins.

One more interesting podcast to check out:

Event of the Week

AI & Societal Robustness Conference

12 December 2025 | Jesus College, Cambridge, UK

As AI systems become increasingly integrated into critical infrastructure and key industries, questions of control, oversight, and institutional safeguarding become paramount.

This one-day conference brings together AI safety and security researchers, policymakers, and other institutional stakeholders. Through technical and policy-oriented talks, collaborative workshops, and 1:1s, we will investigate emerging challenges at the intersection of geopolitics, cybersecurity, societal impacts research, and institutional governance in the era of advanced AI systems. Register now

One more thing

This is how people are addressing AI literacy at the moment

That’s it for today!

Before you go, we'd love to know what you thought of today's newsletter to help us improve the Project Flux experience for you.

See you soon,

James, Yoshi and Aaron—Project Flux
